How to set up AWS EBS Persistent Volumes for AWS EKS workloads

In this article we will set up an AWS EKS cluster and dynamically provision AWS EBS volumes for the Kubernetes workloads running on it, using the AWS EBS CSI driver.

If you prefer watching a video, here is a YouTube video that walks through the same step-by-step procedure outlined below.

Procedure

Step1: Ensure the AWS CLI is installed and configured

As a first step, we need to ensure that the AWS CLI is installed and configured on the workstation from which we will manage our AWS resources. Here are the instructions for Linux (x86_64).

Install AWS CLI

$ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

$ unzip awscliv2.zip

$ sudo ./aws/install

Configure AWS CLI

$ aws configure

AWS Access Key ID [None]: xxx

AWS Secret Access Key [None]: xxx

Default region name [None]: us-east-1

Default output format [None]: json
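Before moving on, it is worth confirming that the credentials you just entered actually work. The sketch below wraps the real `aws sts get-caller-identity` call, which requires no IAM permissions and is therefore a safe credential check; the function name `verify_aws_cli` is our own, not part of the AWS CLI.

```shell
# Hypothetical helper: confirm the AWS CLI is on PATH and can authenticate.
verify_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "aws CLI not found on PATH" >&2
    return 1
  fi
  # Prints the ARN of the IAM identity the CLI is using; this call fails
  # if the configured credentials are missing or invalid.
  aws sts get-caller-identity --query 'Arn' --output text
}
```

$ verify_aws_cli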

Step2: Ensure kubectl is installed

kubectl is the CLI tool used to manage objects within a Kubernetes cluster.

Install kubectl

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

$ sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
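The Kubernetes project also publishes a SHA-256 checksum next to each kubectl binary (the same URL with a `.sha256` suffix), so you can confirm the download was not corrupted. A minimal sketch; the `verify_sha256` helper name is our own, and it assumes the checksum file contains only the hex digest, as kubectl's `.sha256` files do.

```shell
# Hypothetical helper: check a file against a checksum file that contains
# only the expected hex digest.
verify_sha256() {
  local file="$1" checksum_file="$2"
  # sha256sum --check expects lines of the form "<digest>  <filename>".
  echo "$(cat "$checksum_file")  $file" | sha256sum --check --status
}
```

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"

$ verify_sha256 kubectl kubectl.sha256 && echo "kubectl: OK"

If a new stable release was published between the two downloads, the versions will not match; in that case download both files again.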

Validate kubectl version

$ kubectl version --client

Client Version: v1.32.1

Kustomize Version: v5.5.0

Step3: Ensure eksctl is installed

The eksctl command lets you create and modify Amazon EKS clusters.

Install eksctl

$ ARCH=amd64

$ PLATFORM=$(uname -s)_$ARCH

$ curl -sLO "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_$PLATFORM.tar.gz"

$ tar -xzf eksctl_$PLATFORM.tar.gz -C /tmp && rm eksctl_$PLATFORM.tar.gz

$ sudo mv /tmp/eksctl /usr/local/bin

Validate eksctl version

$ eksctl version

0.202.0

Step4: Create a cluster with AWS EC2 managed nodes

Here we are going to create an AWS EKS cluster with AWS EC2 instances as managed worker nodes for hosting the Kubernetes workloads.

This command creates two CloudFormation stacks: one for the cluster itself and one for the initial managed nodegroup, which consists of two EC2 instances.

$ eksctl create cluster --name kubestack --region us-east-1

Output:

2025-01-25 06:17:23 [ℹ] eksctl version 0.202.0

2025-01-25 06:17:23 [ℹ] using region us-east-1

2025-01-25 06:17:24 [ℹ] setting availability zones to [us-east-1a us-east-1f]

2025-01-25 06:17:24 [ℹ] subnets for us-east-1a - public:192.168.0.0/19 private:192.168.64.0/19

2025-01-25 06:17:24 [ℹ] subnets for us-east-1f - public:192.168.32.0/19 private:192.168.96.0/19

2025-01-25 06:17:24 [ℹ] nodegroup "ng-8dc48fbf" will use "" [AmazonLinux2/1.30]

2025-01-25 06:17:24 [ℹ] using Kubernetes version 1.30

2025-01-25 06:17:24 [ℹ] creating EKS cluster "kubestack" in "us-east-1" region with managed nodes

2025-01-25 06:17:24 [ℹ] will create 2 separate CloudFormation stacks for cluster itself and the initial managed nodegroup

2025-01-25 06:17:24 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-east-1 --cluster=kubestack'

2025-01-25 06:17:24 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "kubestack" in "us-east-1"

2025-01-25 06:17:24 [ℹ] CloudWatch logging will not be enabled for cluster "kubestack" in "us-east-1"

2025-01-25 06:17:24 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=us-east-1 --cluster=kubestack'

2025-01-25 06:17:24 [ℹ] default addons metrics-server, vpc-cni, kube-proxy, coredns were not specified, will install them as EKS addons

2025-01-25 06:17:24 [ℹ]
2 sequential tasks: { create cluster control plane "kubestack",
    2 sequential sub-tasks: {
        2 sequential sub-tasks: {
            1 task: { create addons },
            wait for control plane to become ready,
        },
        create managed nodegroup "ng-8dc48fbf",
    }
}

2025-01-25 06:17:24 [ℹ] building cluster stack "eksctl-kubestack-cluster"

2025-01-25 06:17:26 [ℹ] deploying stack "eksctl-kubestack-cluster"

2025-01-25 06:17:56 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:18:27 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:19:29 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:20:30 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:21:31 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:22:32 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:23:33 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:24:34 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:25:35 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-cluster"

2025-01-25 06:25:40 [ℹ] creating addon

2025-01-25 06:25:41 [ℹ] successfully created addon

2025-01-25 06:25:42 [!] recommended policies were found for "vpc-cni" addon, but since OIDC is disabled on the cluster, eksctl cannot configure the requested permissions; the recommended way to provide IAM permissions for "vpc-cni" addon is via pod identity associations; after addon creation is completed, add all recommended policies to the config file, under `addon.PodIdentityAssociations`, and run `eksctl update addon`

2025-01-25 06:25:42 [ℹ] creating addon

2025-01-25 06:25:43 [ℹ] successfully created addon

2025-01-25 06:25:43 [ℹ] creating addon

2025-01-25 06:25:44 [ℹ] successfully created addon

2025-01-25 06:25:45 [ℹ] creating addon

2025-01-25 06:25:45 [ℹ] successfully created addon

2025-01-25 06:27:52 [ℹ] building managed nodegroup stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:27:54 [ℹ] deploying stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:27:54 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:28:25 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:29:14 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:30:47 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 06:30:48 [ℹ] waiting for the control plane to become ready

2025-01-25 06:30:48 [✔] saved kubeconfig as "/home/admin/.kube/config"

2025-01-25 06:30:48 [ℹ] no tasks

2025-01-25 06:30:48 [✔] all EKS cluster resources for "kubestack" have been created

2025-01-25 06:30:50 [ℹ] nodegroup "ng-8dc48fbf" has 2 node(s)

2025-01-25 06:30:50 [ℹ] node "ip-192-168-19-23.ec2.internal" is ready

2025-01-25 06:30:50 [ℹ] node "ip-192-168-37-43.ec2.internal" is ready

2025-01-25 06:30:50 [ℹ] waiting for at least 2 node(s) to become ready in "ng-8dc48fbf"

2025-01-25 06:30:50 [ℹ] nodegroup "ng-8dc48fbf" has 2 node(s)

2025-01-25 06:30:50 [ℹ] node "ip-192-168-19-23.ec2.internal" is ready

2025-01-25 06:30:50 [ℹ] node "ip-192-168-37-43.ec2.internal" is ready

2025-01-25 06:30:50 [✔] created 1 managed nodegroup(s) in cluster "kubestack"

2025-01-25 06:30:52 [ℹ] kubectl command should work with "/home/admin/.kube/config", try 'kubectl get nodes'

2025-01-25 06:30:52 [✔] EKS cluster "kubestack" in "us-east-1" region is ready

Validate node status

$ kubectl get nodes -o wide

NAME                            STATUS   ROLES    AGE     VERSION               INTERNAL-IP     EXTERNAL-IP     OS-IMAGE         KERNEL-VERSION                  CONTAINER-RUNTIME
ip-192-168-19-23.ec2.internal   Ready    <none>   4m18s   v1.30.8-eks-aeac579   192.168.19.23   54.173.212.79   Amazon Linux 2   5.10.230-223.885.amzn2.x86_64   containerd://1.7.23
ip-192-168-37-43.ec2.internal   Ready    <none>   4m17s   v1.30.8-eks-aeac579   192.168.37.43   98.84.8.31      Amazon Linux 2   5.10.230-223.885.amzn2.x86_64   containerd://1.7.23
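Rather than re-running `kubectl get nodes` until every node shows `Ready`, you can let kubectl block until they are. The sketch below uses the real `kubectl wait --for=condition=Ready` command; the function names and the 300-second default timeout are our own assumptions.

```shell
# Hypothetical helper: block until every node reports the Ready condition.
wait_for_nodes() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}

# Hypothetical helper: count nodes whose STATUS column (field 2) is exactly
# "Ready", reading `kubectl get nodes --no-headers` output on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

$ wait_for_nodes && kubectl get nodes --no-headers | count_ready_nodes

For the cluster above, the count should match the two nodes in the managed nodegroup.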

Validate pod status

$ kubectl get pods -A -o wide

NAMESPACE     NAME                             READY   STATUS    RESTARTS   AGE     IP               NODE                            NOMINATED NODE   READINESS GATES
kube-system   aws-node-gg7kf                   2/2     Running   0          4m53s   192.168.19.23    ip-192-168-19-23.ec2.internal   <none>           <none>
kube-system   aws-node-mspl5                   2/2     Running   0          4m52s   192.168.37.43    ip-192-168-37-43.ec2.internal   <none>           <none>
kube-system   coredns-586b798467-kvjc7         1/1     Running   0          8m41s   192.168.48.179   ip-192-168-37-43.ec2.internal   <none>           <none>
kube-system   coredns-586b798467-m2xlg         1/1     Running   0          8m41s   192.168.8.32     ip-192-168-19-23.ec2.internal   <none>           <none>
kube-system   kube-proxy-bt247                 1/1     Running   0          4m53s   192.168.19.23    ip-192-168-19-23.ec2.internal   <none>           <none>
kube-system   kube-proxy-fqz7f                 1/1     Running   0          4m52s   192.168.37.43    ip-192-168-37-43.ec2.internal   <none>           <none>
kube-system   metrics-server-9fbbd6f84-64xjb   1/1     Running   0          8m45s   192.168.27.8     ip-192-168-19-23.ec2.internal   <none>           <none>
kube-system   metrics-server-9fbbd6f84-rtp9r   1/1     Running   0          8m45s   192.168.28.190   ip-192-168-19-23.ec2.internal   <none>           <none>
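On a busier cluster, scanning this table by eye gets tedious. A small awk filter can list only the pods that are not `Running` or whose READY count is incomplete; it is our own helper, not a kubectl feature, and it assumes the column layout shown above (NAMESPACE, NAME, READY, STATUS, ...).

```shell
# Hypothetical helper: read `kubectl get pods -A --no-headers` on stdin and
# print namespace/name for pods that are not Running or not fully ready.
# Columns: $1 NAMESPACE, $2 NAME, $3 READY ("x/y"), $4 STATUS.
unhealthy_pods() {
  awk '{
    split($3, r, "/")
    if (r[1] != r[2] || $4 != "Running") print $1 "/" $2
  }'
}
```

$ kubectl get pods -A --no-headers | unhealthy_pods

Empty output means every pod is running with all containers ready. Pods from completed Jobs would also be listed, since their status is not `Running`.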

Step5: Install AWS EBS CSI Driver

We will use the Helm package manager to install the AWS EBS CSI driver.

Install the Helm package manager

$ sudo dnf install helm

Add Helm repo

$ helm repo add aws-ebs-csi-driver https://kubernetes-sigs.github.io/aws-ebs-csi-driver

"aws-ebs-csi-driver" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aws-ebs-csi-driver" chart repository

Update Complete. ⎈Happy Helming!⎈

Install AWS EBS CSI Driver package

$ helm upgrade --install aws-ebs-csi-driver \
    --namespace kube-system \
    aws-ebs-csi-driver/aws-ebs-csi-driver

Release "aws-ebs-csi-driver" does not exist. Installing it now.

NAME: aws-ebs-csi-driver

LAST DEPLOYED: Sat Jan 25 06:48:01 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

NOTES:

To verify that aws-ebs-csi-driver has started, run:

kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-ebs-csi-driver,app.kubernetes.io/instance=aws-ebs-csi-driver"

[ACTION REQUIRED] Update to the EBS CSI Driver IAM Policy

Due to an upcoming change in handling of IAM polices for the CreateVolume API when creating a volume from an EBS snapshot, a change to your EBS CSI Driver policy may be needed. For more information and remediation steps, see GitHub issue #2190 (https://github.com/kubernetes-sigs/aws-ebs-csi-driver/issues/2190). This change affects all versions of the EBS CSI Driver and action may be required even on clusters where the driver is not upgraded.

Verify the status of the CSI controller and node pods

$ kubectl get pods -n kube-system -l app.kubernetes.io/name=aws-ebs-csi-driver

NAME                                  READY   STATUS    RESTARTS   AGE
ebs-csi-controller-8574568c56-6mxn2   5/5     Running   0          5m7s
ebs-csi-controller-8574568c56-gmj6h   5/5     Running   0          5m7s
ebs-csi-node-dllbx                    3/3     Running   0          5m7s
ebs-csi-node-mzbjb                    3/3     Running   0          5m7s
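Judging from the pod names above, the chart runs the controller as a Deployment named `ebs-csi-controller` and the node plugin as a DaemonSet named `ebs-csi-node`. `kubectl rollout status` can wait until both are fully rolled out. The wrapper function and its default timeout are our own; the resource names are inferred from the pod names, not taken from the chart.

```shell
# Hypothetical helper: wait for the EBS CSI driver workloads to be rolled out.
# Resource names are inferred from the pod names (ebs-csi-controller-*, ebs-csi-node-*).
wait_for_ebs_csi() {
  local ns="${1:-kube-system}" timeout="${2:-180s}"
  kubectl -n "$ns" rollout status deployment/ebs-csi-controller --timeout="$timeout" &&
    kubectl -n "$ns" rollout status daemonset/ebs-csi-node --timeout="$timeout"
}
```

$ wait_for_ebs_csi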

Check the logs of the controller and node pods

$ kubectl logs ebs-csi-controller-8574568c56-6mxn2 -n kube-system

Defaulted container "ebs-plugin" out of: ebs-plugin, csi-provisioner, csi-attacher, csi-resizer, liveness-probe

I0125 01:18:19.128118 1 main.go:153] "Initializing metadata"

I0125 01:18:19.131672 1 metadata.go:48] "Retrieved metadata from IMDS"

I0125 01:18:19.132352 1 driver.go:69] "Driver Information" Driver="ebs.csi.aws.com" Version="v1.39.0"

$ kubectl logs ebs-csi-node-dllbx -n kube-system

Defaulted container "ebs-plugin" out of: ebs-plugin, node-driver-registrar, liveness-probe

I0125 01:18:19.119036 1 main.go:153] "Initializing metadata"

I0125 01:18:19.123888 1 metadata.go:48] "Retrieved metadata from IMDS"

I0125 01:18:19.124781 1 driver.go:69] "Driver Information" Driver="ebs.csi.aws.com" Version="v1.39.0"

E0125 01:18:20.141543 1 node.go:855] "Unexpected failure when attempting to remove node taint(s)" err="isAllocatableSet: driver not found on node ip-192-168-19-23.ec2.internal"

E0125 01:18:20.651192 1 node.go:855] "Unexpected failure when attempting to remove node taint(s)" err="isAllocatableSet: driver not found on node ip-192-168-19-23.ec2.internal"

I0125 01:18:21.660968 1 node.go:935] "CSINode Allocatable value is set" nodeName="ip-192-168-19-23.ec2.internal" count=25

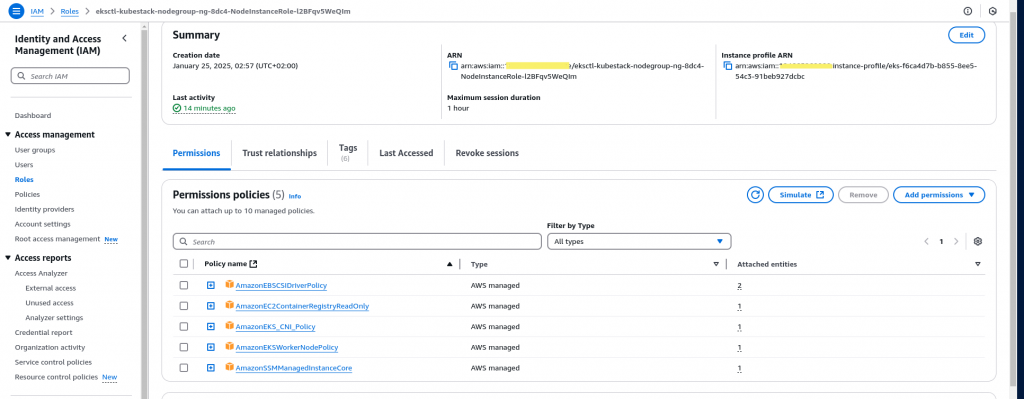
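The two `Unexpected failure when attempting to remove node taint(s)` errors in the node log appear to be transient: they are logged before the driver has finished registering on the node, and the final `CSINode Allocatable value is set` line shows that registration succeeded. You can read the same value from the node's CSINode object. The helper name below is our own; `spec.drivers[].allocatable.count` is a standard CSINode field.

```shell
# Hypothetical helper: print how many EBS volumes the driver reports it can
# attach to the given node (the CSINode allocatable count).
ebs_attach_limit() {
  local node="$1"
  kubectl get csinode "$node" \
    -o jsonpath='{.spec.drivers[?(@.name=="ebs.csi.aws.com")].allocatable.count}'
}
```

$ ebs_attach_limit ip-192-168-19-23.ec2.internal

This should report the same count that the node log prints for that node.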

Step6: Attach the AmazonEBSCSIDriverPolicy policy to the node role

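Here we attach the AWS managed AmazonEBSCSIDriverPolicy policy to the IAM role used by the worker nodes. The EBS CSI controller pods run on these nodes, and since no dedicated IAM role is configured for the driver's service account in this setup, they fall back to the node's instance role credentials. The policy grants them permission to create, attach, detach, and delete EBS volumes through the EC2 API. The screenshot below shows the updated role.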
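If you prefer the CLI to the console, the same change can be made with `aws eks describe-nodegroup` (to look up the node role) and `aws iam attach-role-policy`. The ARN below is the AWS managed `AmazonEBSCSIDriverPolicy`; the cluster and nodegroup names come from the eksctl output above, and the function names are our own.

```shell
# AWS managed policy that grants the EBS CSI driver its EC2 permissions.
EBS_CSI_POLICY_ARN="arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"

# Hypothetical helper: a role ARN looks like arn:aws:iam::<account>:role/<path/>name;
# attach-role-policy wants only the name, i.e. the part after the last "/".
role_name_from_arn() {
  printf '%s\n' "${1##*/}"
}

# Hypothetical helper: attach the policy to the role used by a managed nodegroup.
attach_ebs_policy_to_nodegroup() {
  local cluster="$1" nodegroup="$2" role_arn
  role_arn=$(aws eks describe-nodegroup --cluster-name "$cluster" \
    --nodegroup-name "$nodegroup" --query 'nodegroup.nodeRole' --output text) || return 1
  aws iam attach-role-policy --role-name "$(role_name_from_arn "$role_arn")" \
    --policy-arn "$EBS_CSI_POLICY_ARN"
}
```

$ attach_ebs_policy_to_nodegroup kubestack ng-8dc48fbf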

Step8: Apply the dynamic provisioning manifests

Now we will use a sample from the driver's repository to provision an EBS volume dynamically and use it as the storage volume for a Kubernetes pod.

Let’s clone the repository and apply the manifests from its dynamic-provisioning example as shown below.

$ git clone https://github.com/kubernetes-sigs/aws-ebs-csi-driver.git

$ cd aws-ebs-csi-driver/examples/kubernetes/dynamic-provisioning

$ kubectl apply -f manifests/
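For reference, the example consists of a StorageClass, a PersistentVolumeClaim and a Pod. The function below prints manifests that approximate them, reconstructed from the names visible in the output later in this article (`ebs-sc`, `ebs-claim`, `4Gi`, `app`, `/data/out.txt`). The exact contents of the upstream files may differ, and the `busybox:1.36` image is our own assumption.

```shell
# Hypothetical helper: print manifests approximating the upstream
# dynamic-provisioning example. The quoted 'EOF' keeps $(date -u) literal,
# so it is evaluated inside the container rather than by your shell.
render_ebs_demo_manifests() {
  cat <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-sc
provisioner: ebs.csi.aws.com
# Delay volume creation until a pod is scheduled, so the EBS volume is
# created in the same Availability Zone as the node that will use it.
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ebs-sc
  resources:
    requests:
      storage: 4Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["/bin/sh", "-c"]
      # Append a UTC timestamp to the EBS-backed volume every 5 seconds.
      args: ["while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]
      volumeMounts:
        - name: persistent-storage
          mountPath: /data
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: ebs-claim
EOF
}
```

$ render_ebs_demo_manifests | kubectl apply -f -

Use this instead of, not in addition to, the cloned manifests, since both define objects with the same names.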

Step9: Validate volume provisioning

Here we will validate that the PVC is bound and that the pod using it is running, as shown below.

$ kubectl get pvc

NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
ebs-claim   Bound    pvc-f1d4fcd6-db37-4c87-874b-d0c1c61a2633   4Gi        RWO            ebs-sc         <unset>                 22s

$ kubectl get pods

NAME   READY   STATUS    RESTARTS   AGE
app    1/1     Running   0          26s

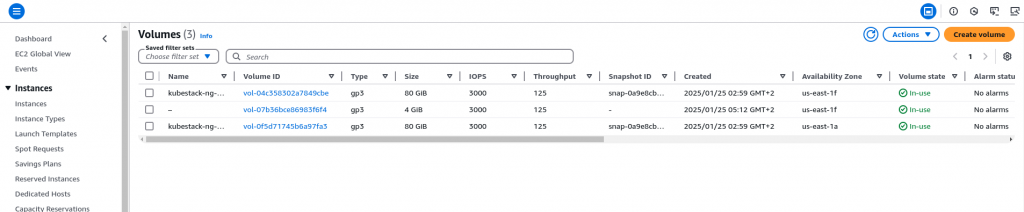

We can also see the EBS volume that was created on the Volumes page of the AWS EC2 console, as shown in the screenshot. Finally, confirm that the pod is writing timestamps to the EBS-backed volume:

$ kubectl exec app -- cat /data/out.txt

...

Sat Jan 25 03:17:48 UTC 2025

Sat Jan 25 03:17:53 UTC 2025

Sat Jan 25 03:17:58 UTC 2025

Sat Jan 25 03:18:03 UTC 2025
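The volume shown in the console can also be found from the command line by following the claim to its PersistentVolume and reading the volume handle, which for this driver is the EBS volume ID. The helper name is our own; `spec.volumeName` and `spec.csi.volumeHandle` are standard Kubernetes fields.

```shell
# Hypothetical helper: print the EBS volume ID backing a PVC.
ebs_volume_id_for_pvc() {
  local pvc="$1" ns="${2:-default}" pv
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
  if [ -z "$pv" ]; then
    echo "PVC $ns/$pvc is not bound yet" >&2
    return 1
  fi
  # For ebs.csi.aws.com volumes, the CSI volume handle is the EBS volume ID.
  kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeHandle}'
}
```

$ VOLUME_ID=$(ebs_volume_id_for_pvc ebs-claim)

$ aws ec2 describe-volumes --volume-ids "$VOLUME_ID" --region us-east-1 --query 'Volumes[0].[VolumeId,Size,State,AvailabilityZone]' --output table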

Step10: Delete EKS Cluster

Once you are satisfied with the demo, delete the cluster to avoid further charges, and remove any IAM roles you no longer need from the AWS console.
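Before running the delete command, it is safer to remove the demo workloads first. Dynamically provisioned volumes use the StorageClass reclaim policy, which defaults to `Delete`, so deleting the claim lets the CSI driver delete the EBS volume. If the cluster goes first, no driver is left to do that and the volume can be left behind in your account. A minimal sketch, run from the dynamic-provisioning directory used in Step8; the function name, the `manifests` default and the roughly five-minute limit are our own assumptions.

```shell
# Hypothetical helper: delete the demo objects, then wait (up to ~5 minutes)
# for the CSI driver to remove the PersistentVolume and its EBS volume.
cleanup_ebs_demo() {
  local manifests_dir="${1:-manifests}" pv tries=0
  # Remember which PV backs the claim before the claim is deleted.
  pv=$(kubectl get pvc ebs-claim -o jsonpath='{.spec.volumeName}' 2>/dev/null)
  kubectl delete -f "$manifests_dir" || return 1
  if [ -z "$pv" ]; then
    return 0
  fi
  while kubectl get pv "$pv" >/dev/null 2>&1; do
    tries=$((tries + 1))
    if [ "$tries" -ge 60 ]; then
      echo "PV $pv still exists; check the EC2 console for a leftover volume" >&2
      return 1
    fi
    sleep 5
  done
}
```

$ cleanup_ebs_demo manifests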

$ eksctl delete cluster --name kubestack --region us-east-1

Output:

2025-01-25 08:51:28 [ℹ] deleting EKS cluster "kubestack"

2025-01-25 08:51:30 [ℹ] will drain 0 unmanaged nodegroup(s) in cluster "kubestack"

2025-01-25 08:51:30 [ℹ] starting parallel draining, max in-flight of 1

2025-01-25 08:51:32 [ℹ] deleted 0 Fargate profile(s)

2025-01-25 08:51:35 [✔] kubeconfig has been updated

2025-01-25 08:51:35 [ℹ] cleaning up AWS load balancers created by Kubernetes objects of Kind Service or Ingress

2025-01-25 08:51:42 [ℹ]
3 sequential tasks: { delete nodegroup "ng-8dc48fbf",
    2 sequential sub-tasks: {
        2 sequential sub-tasks: {
            delete IAM role for serviceaccount "kube-system/ebs-csi-controller-sa",
            delete serviceaccount "kube-system/ebs-csi-controller-sa",
        },
        delete IAM OIDC provider,
    }, delete cluster control plane "kubestack" [async]
}

2025-01-25 08:51:42 [ℹ] will delete stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:51:42 [ℹ] waiting for stack "eksctl-kubestack-nodegroup-ng-8dc48fbf" to get deleted

2025-01-25 08:51:42 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:52:14 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:52:46 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:53:23 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:55:12 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:55:44 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:57:13 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:59:09 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 08:59:44 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 09:01:34 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-nodegroup-ng-8dc48fbf"

2025-01-25 09:01:34 [ℹ] will delete stack "eksctl-kubestack-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

2025-01-25 09:01:34 [ℹ] waiting for stack "eksctl-kubestack-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa" to get deleted

2025-01-25 09:01:34 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

2025-01-25 09:02:06 [ℹ] waiting for CloudFormation stack "eksctl-kubestack-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

2025-01-25 09:02:07 [ℹ] serviceaccount "kube-system/ebs-csi-controller-sa" was not created by eksctl; will not be deleted

2025-01-25 09:02:08 [ℹ] will delete stack "eksctl-kubestack-cluster"

2025-01-25 09:02:10 [✔] all cluster resources were deleted

Hope you enjoyed reading this article. Thank you.
